There is a ton of information and sample code out in the wild, but with the rapid pace of technological change over the years, many samples have become outdated or no longer work. Search engine results feel like archaeological sites that contain artifacts from different eras, and one has to sort through them to make sense of anything. Time flies, so this article will one day be outdated as well and end up in one of those archaeological sites like all the others, but I’ll show you in detail exactly which technologies I used to build the sample code.

We're going to build current token-based authorization and authentication for modern apps, as a self-hosted, .NET-focused solution. The objective is to show how OAuth 2.0 authorization works: requesting the access token, using it to access a protected API, and then seeing the refresh token in action. Many security practices have been omitted; we show only the minimal code needed to achieve our objective, and it cannot be used in a production environment as is. I assume you will implement your own database and security strategies. Lastly, we will show how to use a tool to communicate with our OWIN/OAuth solution, and we will develop a simple console client to interact with the host.

Is it just me?

What is OAuth 2.0?

One source of confusion when learning OAuth or OWIN is not OAuth itself but its flexibility. Great flexibility is not always a good thing for beginners. Many components are designed to work interchangeably within the OAuth framework, and many of them are open source projects with unrelated names. From the names alone, one cannot tell what role a component plays in the framework. In this article, we will focus on Microsoft’s OAuth solution.

Order your dinner to go tonight?

OWIN for .NET Developers

For example, for OWIN alone there are names like Katana, Nancy, Jasper, Suave, Nowin, ACSP.NET, Freya, ASP.NET Web API, ServiceStack, and HttpListener, and the list goes on and on. Some components are deprecated, and some belong to one of these roles: Host, Server, and Middleware.

Katana is a collection of OWIN-compatible components that together make up the whole architecture. It changes how we think about hosts and servers: think of the Server and the Host as functional components that serve other components in the architecture, rather than as a hardware server or the IIS web server. The Host manages the whole environment, from initialization to launching the process. An example of a server is the authorization server, which takes care of authorization and ultimately grants the token. The Middleware consists of layers of frameworks that manipulate the properties flowing in and out through the pipeline. Each layer can be a function, or a small application for a more complex need, or just a simple DelegatingHandler or the bare OWIN application delegate, Func<IDictionary<string, object>, Task>.

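app.Use(async (ctx, next) => { await ctx.Response.WriteAsync("<html><head></head><body>Hello guys!</body></html>"); });

where ‘app’ is the IAppBuilder passed to Configuration(), ‘ctx’ is the IOwinContext that wraps the OWIN environment, and ‘next’ is a delegate (a Func<Task> in this overload) that invokes the next middleware in the pipeline.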
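To make the pipeline idea concrete without any Katana types, here is a minimal sketch that chains two middleware layers around a terminal component using only the bare OWIN delegate. The `PipelineSketch` class, the `Trace` helper, and the `sketch.Log` environment key are made up for illustration; they are not part of OWIN or Katana.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// The OWIN application delegate: environment dictionary in, Task out.
using AppFunc = System.Func<System.Collections.Generic.IDictionary<string, object>, System.Threading.Tasks.Task>;

public static class PipelineSketch
{
    // Hypothetical middleware factory: wraps 'next' and records its own name
    // in the environment before and after the rest of the pipeline runs.
    public static AppFunc Trace(string name, AppFunc next)
    {
        return async env =>
        {
            var log = (List<string>)env["sketch.Log"]; // made-up key, for this sketch only
            log.Add(name + ":in");
            await next(env);
            log.Add(name + ":out");
        };
    }

    public static List<string> Run()
    {
        // Innermost component: the "application" that ends the pipeline.
        AppFunc app = env =>
        {
            ((List<string>)env["sketch.Log"]).Add("app");
            return Task.FromResult(0);
        };

        // Compose from the inside out: outer -> inner -> app.
        AppFunc pipeline = Trace("outer", Trace("inner", app));

        var environment = new Dictionary<string, object>
        {
            { "sketch.Log", new List<string>() }
        };
        pipeline(environment).GetAwaiter().GetResult();
        return (List<string>)environment["sketch.Log"];
    }
}
```

Calling `PipelineSketch.Run()` records the entries outer:in, inner:in, app, inner:out, outer:out, which is the same request-in, response-out ordering that Katana middleware follows.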

Fortunately, with Katana we don’t need to write much code. Once you install the Microsoft.AspNet.WebApi.Owin package, which provides the System.Web.Http.Owin assembly, you can call the UseWebApi extension method defined in the WebApiAppBuilderExtensions class to complete the pipeline and bind the middleware together. Web API’s host adapter design lets components be arranged in a pipeline and decoupled from one another, so every component in the Middleware can perform a different task on a request or response. With the optional ‘scope’ properties, the developer can further scope certain APIs or Middleware layers to smaller tasks.

var config = new HttpConfiguration();

app.UseWebApi(config);

Please note that all methods used in the Middleware are asynchronous, task-based methods. If an error occurs, the middleware should immediately return an error response to the caller rather than continue to the next stage of the pipeline. The OAuth implementation assumes that communication happens over an SSL/TLS connection, so we set AllowInsecureHttp = true only in the development environment, when we set up the OAuthAuthorizationServerOptions portion of the code.

Let's start coding Katana

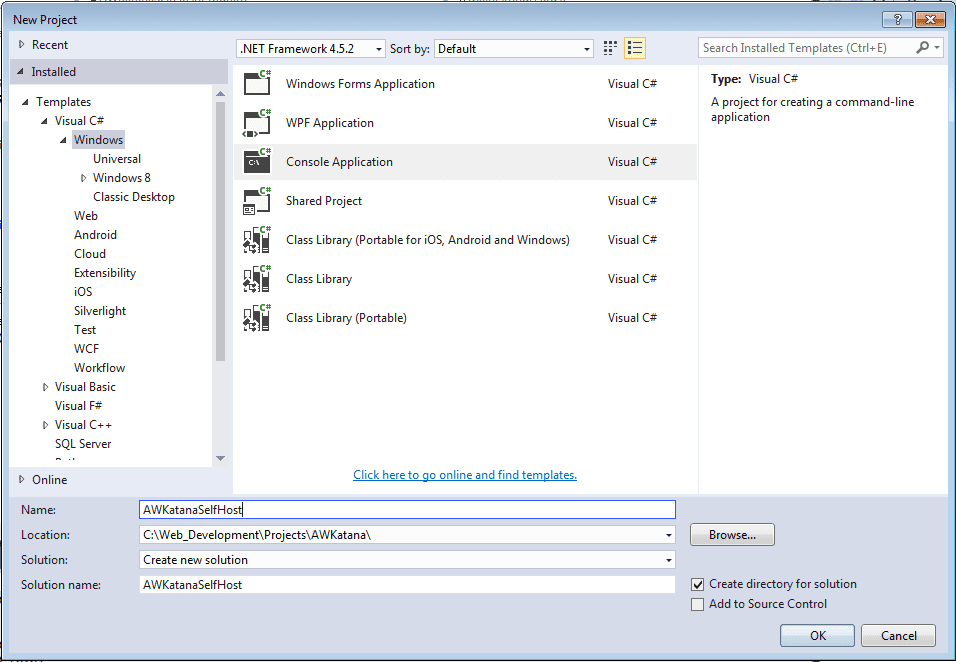

Visual Studio 2015 Community Version

Project Type: Windows Console Application with .NET Framework 4.5.2

Project Name: AWkatanaSelfhost

Package Install:

Microsoft.Owin.Host.SystemWeb

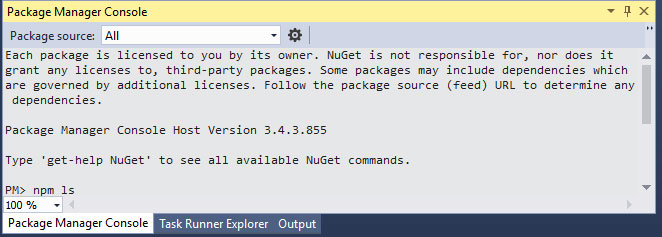

In Package manager console:

PM> Install-Package Microsoft.Owin.Host.SystemWeb

Install-Package Microsoft.AspNet.Identity.Core

Install-Package Microsoft.AspNet.Identity.Owin

Install-Package Microsoft.Owin.Security

Install-Package Microsoft.Owin.Hosting

Install-Package Microsoft.AspNet.WebApi.Owin

Install-Package Microsoft.Owin.Host.HttpListener

1. Create a new project and select Console Application with .NET Framework 4.5.2

    static void Main(string[] args)
    {
        string baseUri = "http://localhost:8000";

        Console.WriteLine("Starting web Server...");

        // WebApp.Start returns an IDisposable; disposing it stops the listener on exit.
        using (WebApp.Start<Startup>(baseUri))
        {
            Console.WriteLine("Server running at {0} - press Enter to quit. ", baseUri);
            Console.ReadLine();
        }
    }

    public void Configuration(IAppBuilder app)
    {
        // Register the OAuth middleware first so it runs before Web API.
        ConfigureAuth(app);

        var webApiConfiguration = ConfigureWebApi();
        app.UseWebApi(webApiConfiguration);
    }
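The Configuration() method above calls two helpers, ConfigureAuth and ConfigureWebApi. A minimal sketch of what they could look like follows. The 30-minute token lifetime, the DEBUG-only switch, and the route template are my assumptions for illustration; MyOAuthServerProvider and MyRefreshTokenProvider are the classes described in steps 5 and 6, so this fragment compiles only once they exist.

```csharp
using System;
using System.Web.Http;
using Microsoft.Owin;
using Microsoft.Owin.Security.OAuth;
using Owin;

public class Startup
{
    // ... Configuration(IAppBuilder app) as shown above ...

    private void ConfigureAuth(IAppBuilder app)
    {
        var options = new OAuthAuthorizationServerOptions
        {
            TokenEndpointPath = new PathString("/Token"),         // the URL used in the Postman steps
            AccessTokenExpireTimeSpan = TimeSpan.FromMinutes(30), // assumed lifetime
            Provider = new MyOAuthServerProvider(),               // step 5
            RefreshTokenProvider = new MyRefreshTokenProvider()   // step 6
        };
#if DEBUG
        // Plain HTTP is accepted in development builds only.
        options.AllowInsecureHttp = true;
#endif
        // Issues tokens at /Token.
        app.UseOAuthAuthorizationServer(options);
        // Validates "Authorization: Bearer ..." headers on incoming API calls.
        app.UseOAuthBearerAuthentication(new OAuthBearerAuthenticationOptions());
    }

    private HttpConfiguration ConfigureWebApi()
    {
        var config = new HttpConfiguration();
        config.MapHttpAttributeRoutes(); // lets controllers declare their own routes
        config.Routes.MapHttpRoute(
            "DefaultApi",
            "api/{controller}/{id}",
            new { id = RouteParameter.Optional });
        return config;
    }
}
```

Registering the authorization server before the bearer middleware, and both before UseWebApi, means a request has already been authenticated by the time it reaches a controller.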

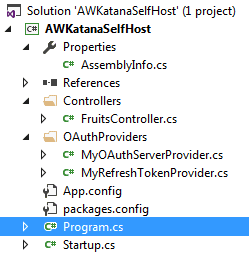

5. Create the MyOAuthServerProvider class in the oAuthProviders folder. This class is the brain of the entire architecture: it validates the incoming credentials against the security data we hold on the server. First, it analyzes the incoming data and determines whether this is a new requestor asking for an access token or a returning requestor asking to renew the access token with a refresh token. The ValidateClientAuthentication method deciphers the incoming data and determines the next action. If the request carries a username and password and the grant type is password, it is passed on to the GrantResourceOwnerCredentials method for further verification, which decides whether to grant an access token or reject the requestor. If the request carries a refresh token and the grant type is refresh_token, the GrantRefreshToken method receives the call after ValidateClientAuthentication and, once the request is validated, issues a brand new ticket containing the new access token.

6. Create the MyRefreshTokenProvider class. This class is fairly self-explanatory: it implements the IAuthenticationTokenProvider interface from the Microsoft.Owin.Security.Infrastructure namespace, and it is where we can customize our refresh token. In our project, the refresh token is simply a GUID.

7. Create a simple API called FruitController as our resource. Once the requestor’s credentials have been verified, the requestor can use the obtained token to access our secret Fruit List. There is no need to pass the username and password again when accessing the protected resource. The API can be protected by simply placing the [Authorize] attribute on the controller or on individual action methods.
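As an illustration of step 7, a protected controller could look roughly like the sketch below. The fruit names and the ListFruits action name are invented. The sketch also assumes attribute routing is enabled with config.MapHttpAttributeRoutes(), because the URL used later is api/Fruits while the controller is named FruitController. It accepts both GET and POST only because the Postman walkthrough sends a POST.

```csharp
using System.Web.Http;

[Authorize]                  // requests without a valid bearer token get 401 Unauthorized
[RoutePrefix("api/Fruits")]
public class FruitController : ApiController
{
    [Route("")]
    [AcceptVerbs("GET", "POST")]
    public IHttpActionResult ListFruits()
    {
        // User is populated by the bearer authentication middleware.
        return Ok(new
        {
            user = User.Identity.Name,
            fruits = new[] { "apple", "banana", "cherry" } // made-up sample data
        });
    }
}
```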

The Self-Host project is now completed with 7 simple steps.
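For reference, here is a minimal sketch of the provider described in step 5. It hard-codes the demo client and user from the Postman examples later in this article, which is acceptable only in a sample. The error descriptions are my own wording, and the client id check in GrantRefreshToken is one common arrangement, not necessarily the only one.

```csharp
using System.Collections.Generic;
using System.Security.Claims;
using System.Threading.Tasks;
using Microsoft.Owin.Security;
using Microsoft.Owin.Security.OAuth;

public class MyOAuthServerProvider : OAuthAuthorizationServerProvider
{
    // Runs first for every /Token request, whatever the grant type.
    public override Task ValidateClientAuthentication(OAuthValidateClientAuthenticationContext context)
    {
        string clientId;
        string clientSecret;
        // Demo values only; a real server would look the client up in a store.
        if (context.TryGetFormCredentials(out clientId, out clientSecret)
            && clientId == "12345" && clientSecret == "secret")
        {
            context.Validated(clientId);
        }
        else
        {
            context.SetError("invalid_client", "Unknown client id or secret.");
        }
        return Task.FromResult<object>(null);
    }

    // grant_type=password
    public override Task GrantResourceOwnerCredentials(OAuthGrantResourceOwnerCredentialsContext context)
    {
        if (context.UserName != "arthur@startrek.com" || context.Password != "enterprise")
        {
            context.SetError("invalid_grant", "The user name or password is incorrect.");
            return Task.FromResult<object>(null);
        }

        var identity = new ClaimsIdentity(context.Options.AuthenticationType);
        identity.AddClaim(new Claim(ClaimTypes.Name, context.UserName));

        // Remember which client asked for this ticket.
        var properties = new AuthenticationProperties(new Dictionary<string, string>
        {
            { "as:client_id", context.ClientId }
        });
        context.Validated(new AuthenticationTicket(identity, properties));
        return Task.FromResult<object>(null);
    }

    // grant_type=refresh_token
    public override Task GrantRefreshToken(OAuthGrantRefreshTokenContext context)
    {
        string originalClient;
        context.Ticket.Properties.Dictionary.TryGetValue("as:client_id", out originalClient);
        if (originalClient != context.ClientId)
        {
            context.SetError("invalid_grant", "The refresh token was issued to a different client.");
            return Task.FromResult<object>(null);
        }

        // Issue a fresh ticket based on the identity stored in the old one.
        var newIdentity = new ClaimsIdentity(context.Ticket.Identity);
        context.Validated(new AuthenticationTicket(newIdentity, context.Ticket.Properties));
        return Task.FromResult<object>(null);
    }
}
```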

The Secret Recipe of Refresh Token

grant_type: refresh_token

refresh_token: 3a3aebea-4150-4850-8e37-ace1d9eead9a [this is our sample and you may have other format]

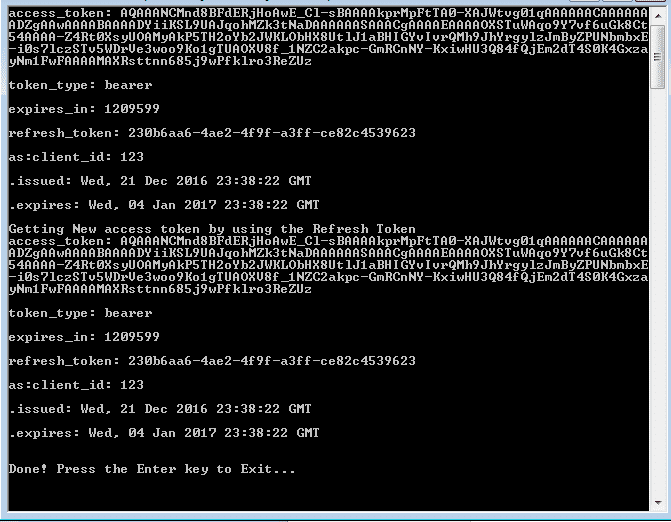

There are many ways to accomplish the same thing, but in our project the trick is to hide an extra authentication property called “as:client_id” in the original ticket when the requestor asks for a token the first time. When the requestor returns with the refresh token and asks for a new access token, the ValidateClientAuthentication method can verify the clientId and clientSecret against the original ticket, so we can be sure that this requestor is the original requestor. Without this trick, the GrantRefreshToken method will never receive the call, even though the grant_type and refresh_token parameters have been passed in. The result can be a generic error message such as “invalid_grant”, and you may not know why.
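To show how a GUID refresh token can carry the original ticket, including the “as:client_id” property, here is a minimal sketch of a refresh token provider. The in-memory dictionary, the one-day lifetime, and the one-time-use behavior are assumptions for illustration; a real project would persist the tokens in its own database.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;
using Microsoft.Owin.Security.Infrastructure;

public class MyRefreshTokenProvider : IAuthenticationTokenProvider
{
    // Sketch-only store: GUID refresh token -> protected (serialized) ticket.
    private static readonly ConcurrentDictionary<string, string> Tickets =
        new ConcurrentDictionary<string, string>();

    public void Create(AuthenticationTokenCreateContext context)
    {
        // Give the refresh token its own, longer lifetime (assumed: one day).
        context.Ticket.Properties.IssuedUtc = DateTimeOffset.UtcNow;
        context.Ticket.Properties.ExpiresUtc = DateTimeOffset.UtcNow.AddDays(1);

        // The serialized ticket keeps every property, "as:client_id" included.
        var token = Guid.NewGuid().ToString();
        Tickets[token] = context.SerializeTicket();
        context.SetToken(token);
    }

    public void Receive(AuthenticationTokenReceiveContext context)
    {
        string protectedTicket;
        // One-time use: the token is removed as soon as it is presented.
        if (Tickets.TryRemove(context.Token, out protectedTicket))
        {
            context.DeserializeTicket(protectedTicket);
        }
        // If no ticket is set here, the middleware rejects the request.
    }

    public Task CreateAsync(AuthenticationTokenCreateContext context)
    {
        Create(context);
        return Task.FromResult<object>(null);
    }

    public Task ReceiveAsync(AuthenticationTokenReceiveContext context)
    {
        Receive(context);
        return Task.FromResult<object>(null);
    }
}
```

Because every successful refresh runs Create again, the client receives a new refresh token along with each new access token.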

Create another .NET console project in a separate solution. This will be the client that requests the access token and uses it to access the protected resources.

Project Type: Windows Console Application with .NET Framework 4.5.2

Project Name: AWkatanaClient

Package Install:

PM> Install-Package Microsoft.AspNet.WebApi.Client

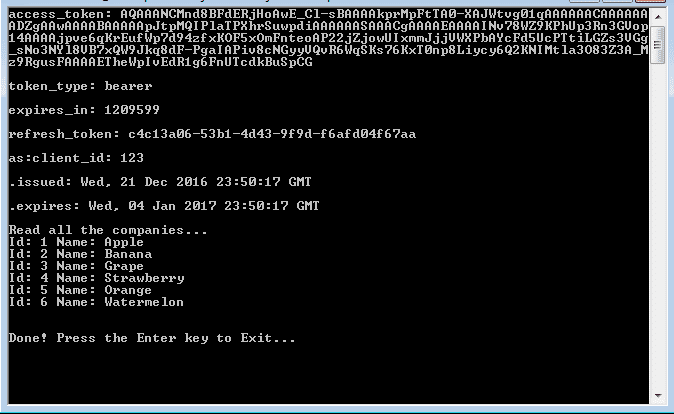

I’ve created two separate methods in Main(). One demonstrates how the refresh token works, and the other demonstrates how to access the protected resource. You can comment out one of them to examine the mechanism of token generation and consumption. Please run the AWkatanaSelfhost project first and then AWkatanaClient, so that the host is already listening when the client starts. See the results below:
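A minimal sketch of such a client follows. It is not the original client code: the ClientSketch and TokenResponse names are made up, the credentials are the demo values from the Postman steps, and ReadAsAsync comes from the Microsoft.AspNet.WebApi.Client package installed above. The sketch sends a POST to api/Fruits to match the Postman walkthrough; switch to HttpMethod.Get if your controller only exposes GET.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;
using Newtonsoft.Json;

public class TokenResponse
{
    [JsonProperty("access_token")] public string AccessToken { get; set; }
    [JsonProperty("refresh_token")] public string RefreshToken { get; set; }
}

public static class ClientSketch
{
    private const string BaseUri = "http://localhost:8000";

    // Form fields for the first request (grant_type=password).
    public static Dictionary<string, string> PasswordGrant(string user, string password)
    {
        return new Dictionary<string, string>
        {
            { "grant_type", "password" }, { "username", user }, { "password", password },
            { "client_id", "12345" }, { "client_secret", "secret" }
        };
    }

    // Form fields for renewing the access token (grant_type=refresh_token).
    public static Dictionary<string, string> RefreshGrant(string refreshToken)
    {
        return new Dictionary<string, string>
        {
            { "grant_type", "refresh_token" }, { "refresh_token", refreshToken },
            { "client_id", "12345" }, { "client_secret", "secret" }
        };
    }

    public static async Task<TokenResponse> RequestTokenAsync(HttpClient http, Dictionary<string, string> form)
    {
        // FormUrlEncodedContent sends application/x-www-form-urlencoded.
        var response = await http.PostAsync(BaseUri + "/Token", new FormUrlEncodedContent(form));
        response.EnsureSuccessStatusCode();
        return await response.Content.ReadAsAsync<TokenResponse>();
    }

    public static async Task<string> CallFruitsAsync(HttpClient http, string accessToken)
    {
        var request = new HttpRequestMessage(HttpMethod.Post, BaseUri + "/api/Fruits");
        // The token travels in the Authorization header, not in the body.
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", accessToken);
        var response = await http.SendAsync(request);
        response.EnsureSuccessStatusCode();
        return await response.Content.ReadAsStringAsync();
    }

    public static async Task RunAsync()
    {
        using (var http = new HttpClient())
        {
            var first = await RequestTokenAsync(http, PasswordGrant("arthur@startrek.com", "enterprise"));
            Console.WriteLine(await CallFruitsAsync(http, first.AccessToken));

            var renewed = await RequestTokenAsync(http, RefreshGrant(first.RefreshToken));
            Console.WriteLine(await CallFruitsAsync(http, renewed.AccessToken));
        }
    }
}
```

From Main(), the sketch can be started with ClientSketch.RunAsync().GetAwaiter().GetResult(); since a console Main in this project type cannot itself be async.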

How to use Postman to test your Katana Host?

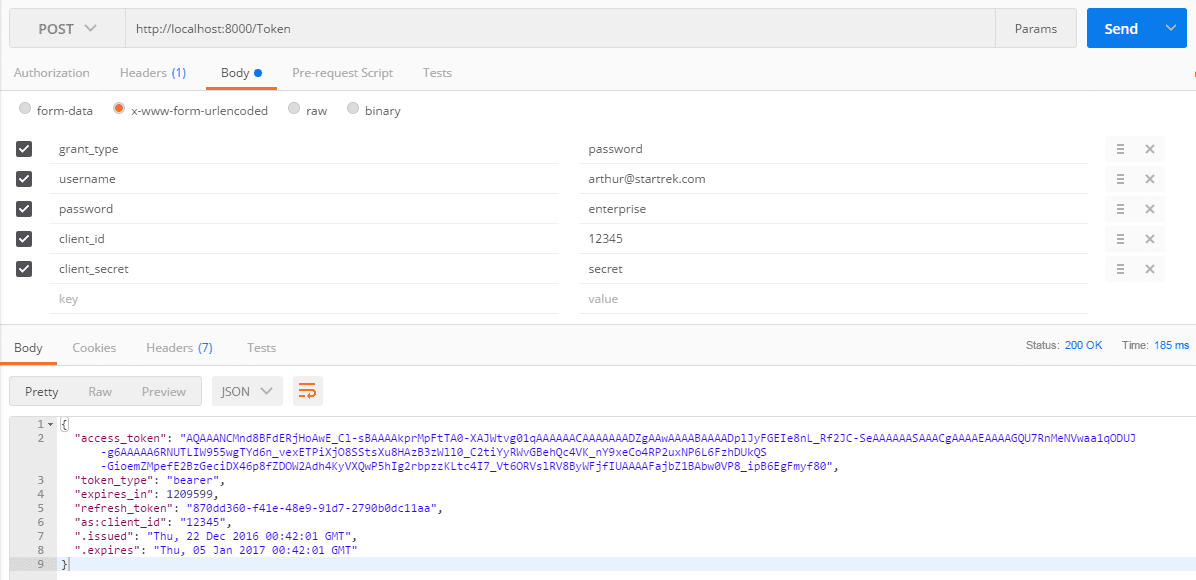

How to emulate a client requesting the access token for the first time?

2. In the URL text box, enter: http://localhost:8000/Token

3. Click on the “Body” tab, and ignore the “Authorization” and “Headers” tabs

4. Select the radio button: application/x-www-form-urlencoded

5. Add the following keys and values

grant_type: password

username: arthur@startrek.com

password: enterprise

client_id: 12345

client_secret: secret

6. Click on the “Send” button

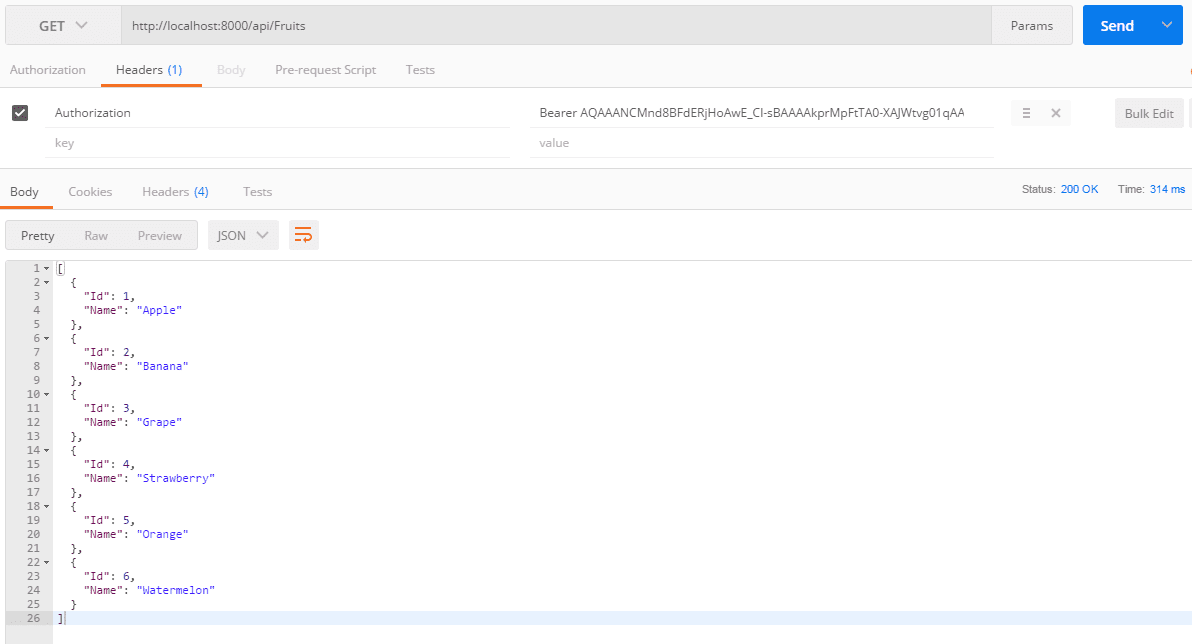

How to emulate a client accessing the protected resources?

2. Create another tab and change to “POST”

3. In the URL text box, enter: http://localhost:8000/api/Fruits

4. Click on the “Headers” tab, and add this key and value [Remember: in the headers and NOT in the body]

Authorization: Bearer AQAAANCMnd8BFdERjHoAwE_Cl-A.. <--- your access token here

5. Click on the “Send” button

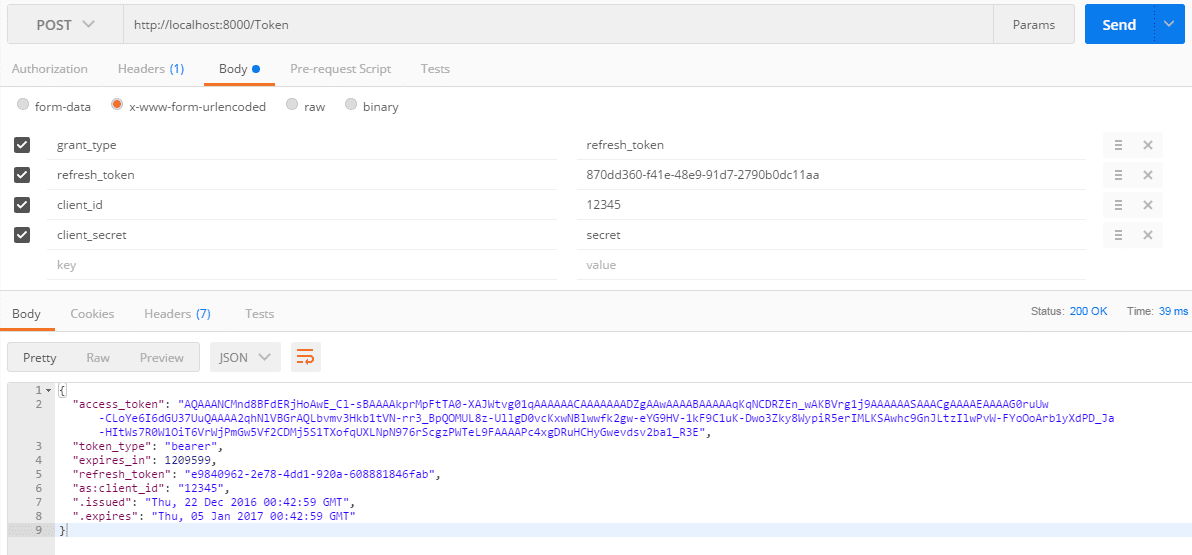

How to obtain the new access token from your refresh token?

2. Create another tab and change to “POST”

3. In the URL text box, enter: http://localhost:8000/Token

4. Click on the “Body” tab, and add these keys and values

grant_type: refresh_token

refresh_token: 870dd360-f41e-48e9-91d7-2790b0dc11aa <--- from step #1

client_id: 12345

client_secret: secret

5. Click on the “Send” button

Summary

Useful Links

Postman Tool - https://www.getpostman.com

OAuth 2.0 Official Standard (RFC 6749) - https://tools.ietf.org/html/rfc6749#section-4.3.2
